Snap’s latest play in big tech’s race to produce AR glasses

Snap recently released its fourth-generation Spectacles. The release is the latest upgrade to Snap’s line of Spectacles, following the previous version, Spectacles 3, launched in 2020.

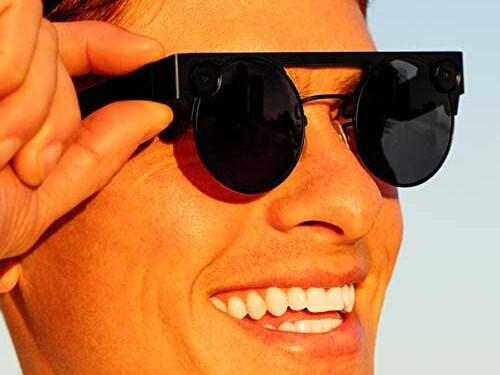

Image: Snap

Imagine you are sitting on your couch on a rainy day. You snap on your augmented reality (AR) glasses, and suddenly you are transported to a sunny green hillside with a purple butterfly landing in the palm of your outstretched hand.

Seem crazy? Well, Snap recently released its fourth-generation Spectacles, which make (virtual) trips like this possible. This release is the latest upgrade to Snap’s line of Spectacles, following the previous version, Spectacles 3, which launched in 2020. The new frames feature two front-facing cameras, four built-in microphones, two stereo speakers, and a built-in touchpad, and they support voice control.

“So how realistic can these AR experiences really be?” you may ask. The two front-facing cameras help the glasses detect the objects and surfaces that you’re looking at, so the graphics interact more naturally with the world around you.

The catch?

Unlike past models, Snap’s fourth generation of Spectacles isn’t available to consumers just yet. At its virtual Partner Summit for developers in May, Snap announced it will give the glasses directly to AR effects creators through an online application program. Why? Neither the product nor the consumer is ready for AR glasses because:

- Consumers won’t buy products unless there is a use case. Some consumers will buy the Spectacles purely for entertainment, the same reason they buy TVs and video games. But reaching the mass market will likely take more than entertainment. Snap will have more success (and consumers will pay more) if it launches with content and services. It therefore makes sense that Snap is releasing this product to the 200,000 people who already make AR effects in Snapchat, so they can experiment with creating experiences for the new Spectacles.

- There isn’t yet strong consumer demand for AR glasses. Moving while consuming 21st-century digital media is an untested use case. I wrote about my experience wearing FORM Smart Swim Goggles, an example of an AR headset. While swimming in the goggles, I found that the in-goggle display showing my swimming speed slightly obscured my vision. While this AR headset feels safe for a pool, I am not sure how I would feel wearing one while skiing, jogging, or cycling.

- AR glasses depend on connectivity and battery life. Even though these glasses offer a hands-free experience, they will need a smartphone for connectivity such as 5G. Faster than 4G and better than today’s best Wi-Fi, 5G has more than enough bandwidth to power most AR experiences. The glasses also need a source of power. One possibility is a battery within the glasses, but that adds weight. As of now, the glasses weigh 134 grams, more than double the weight of Spectacles 3, and the battery lasts only 30 minutes. By comparison, my FORM swim goggles can last for up to 15 hours of swimming.

What other steps is Snap taking to make AR a reality?

Snap is:

- Purchasing IP and talent. In May, Snap announced it is buying its UK display supplier WaveOptics for $500 million, its largest deal yet. This supplier primarily makes waveguides, a display technology that overlays virtual objects on the real world using a transparent surface, such as glass, and accompanying light projectors. This strategic move matches those of Snap’s large tech competitors, such as Apple’s 2018 acquisition of Akonia.

- Funding the Ghost AR Innovation Lab. Snap announced plans to fund a $3.5 million AR innovation lab, with additional funding from Verizon for building 5G AR experiences. The partnership benefits both enterprises: Snap gains backing for new AR experiences, and Verizon gains a potential consumer use case for 5G. Today, the 5G business case lacks strong consumer demand other than as a home broadband alternative, and the internet-of-things products and autonomous vehicles that will fully leverage 5G still lie in the future.

- Putting devices into the hands of developers. Snap is tapping into its community of over 200,000 Lens creators, developers, and partners who are already building millions of AR experiences in Snap’s Lens Studio. Developers won’t have to purchase the Spectacles, but they will have to apply to receive a pair. Snap hopes to encourage this community to experiment with creating Lenses for the Spectacles and, in doing so, create buzz for the glasses.

What is waveguide technology, and why is it such a big deal?

Waveguide technology is essential for AR glasses because it shrinks the hardware and delivers visual experiences that will wow consumers.

- An optical waveguide is considered the best choice. It is thin and light, and it comprises a micro-display and imaging optics. The imaging system requires what’s called an optical combiner, which reflects a virtual image while transmitting external light to the human eye. As a result, virtual content appears on top of the real landscape.

- There is still work to be done. Despite the thin optics, today’s waveguide-based glasses remain bulky, heavy, and expensive. These are key barriers that stand in the way of AR glasses becoming consumer-ready.

This post was written by Vice President and Principal Analyst Julie Ask, and it originally appeared here.