Reinforcement Learning Progress: A Thriving Landscape

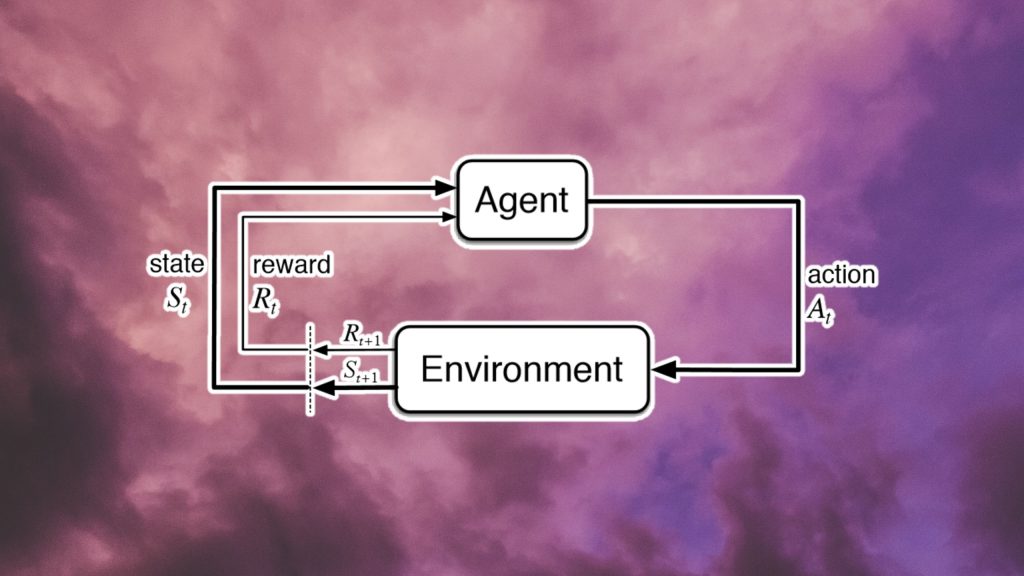

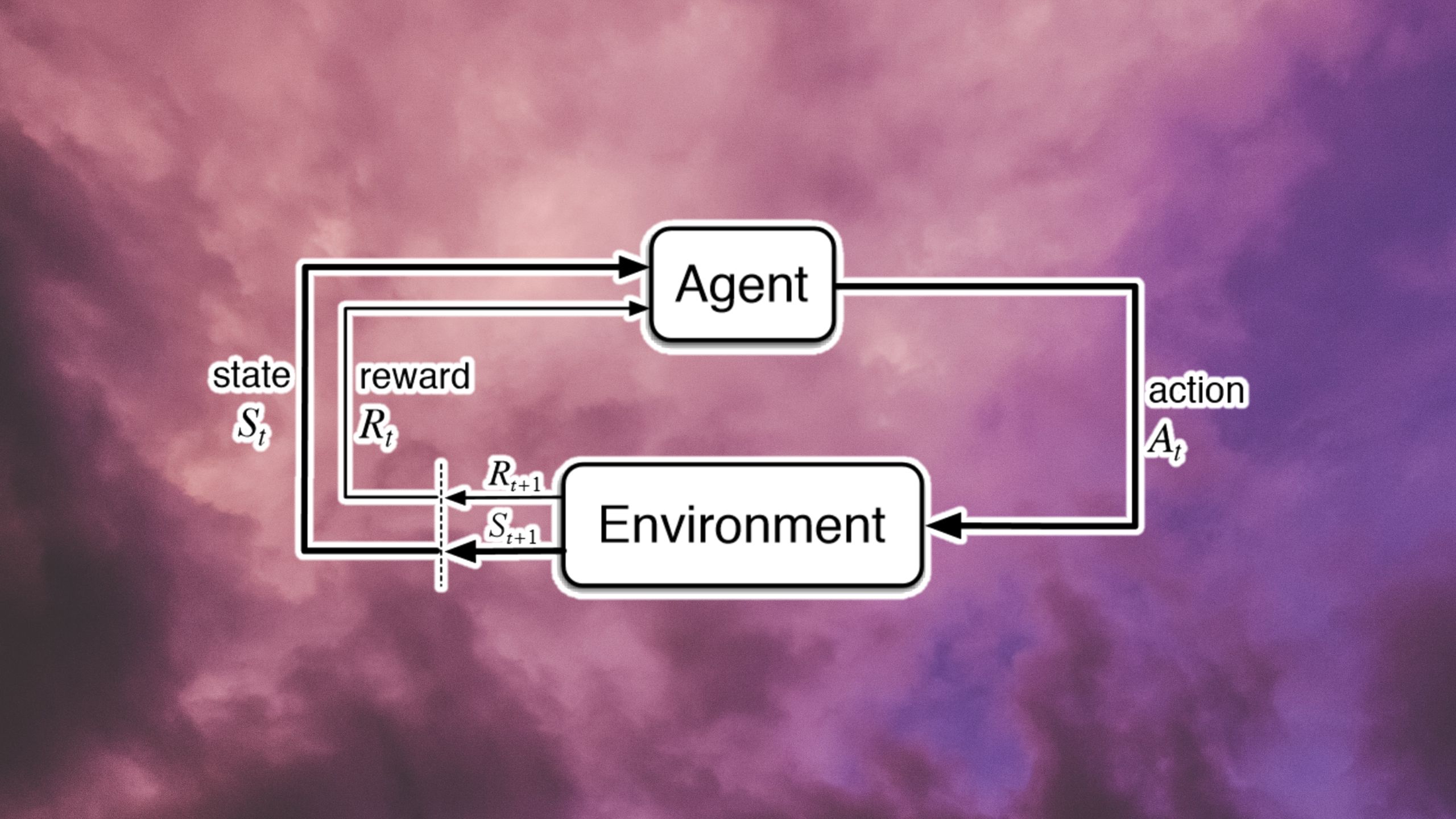

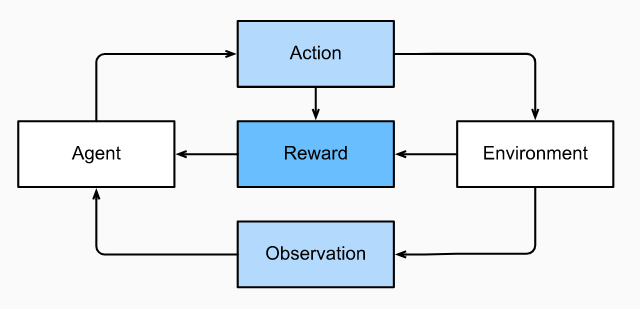

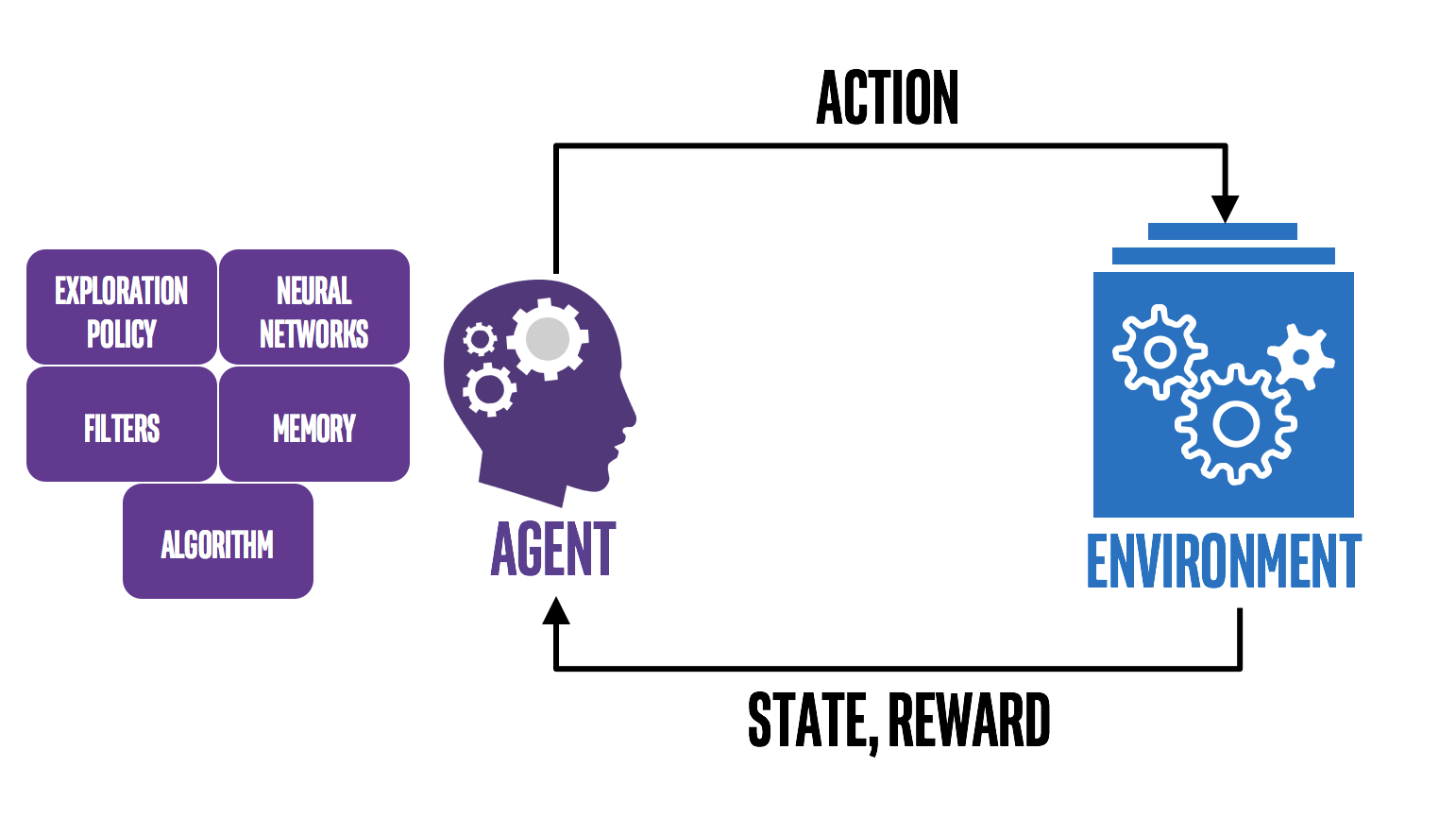

Reinforcement learning (RL) has made significant strides in recent years, evolving from a largely theoretical field into a practical tool with real-world applications. Here is a breakdown of where progress is happening:

Sample Efficiency: One of the most significant advances is improved sample efficiency. RL agents now need fewer interactions with their environment to reach a given level of performance, which means faster training and lower costs, especially for tasks where each interaction is expensive. Recent research estimates that the number of samples needed to reach a fixed score has halved roughly every 10-18 months on Atari games and every 5-24 months on continuous control tasks, which amounts to exponential progress over the period studied.
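
Doubling times are easier to interpret as arithmetic. The sketch below assumes that "sample efficiency" means the number of environment samples needed to reach a fixed score and that the doubling time stays constant; under those assumptions, efficiency grows by a factor of 2^(t / T) after t months with doubling time T. The function names are illustrative, not taken from the research. At the Atari range of 10-18 months, three years of progress would cut the samples needed by roughly 4x to 12x.

```python
def improvement_factor(months: float, doubling_time_months: float) -> float:
    """Factor by which sample efficiency grows after `months`,
    assuming it doubles every `doubling_time_months` (constant trend)."""
    if doubling_time_months <= 0:
        raise ValueError("doubling time must be positive")
    return 2 ** (months / doubling_time_months)


def samples_needed(initial_samples: float, months: float, doubling_time_months: float) -> float:
    """Samples needed to reach the same score after `months` of progress."""
    return initial_samples / improvement_factor(months, doubling_time_months)


# Three years of progress at both ends of the Atari doubling-time range.
for doubling in (10, 18):
    print(doubling, round(improvement_factor(36, doubling), 1))
```

For example, under a 12-month doubling time, a task that once needed one million samples would need `samples_needed(1_000_000, 36, 12)`, i.e. 125,000, three years later.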

Algorithmic Developments: New algorithms continue to expand what RL can achieve. Algorithms such as Proximal Policy Optimization (PPO), together with multi-agent reinforcement learning methods, are enabling agents to handle complex, dynamic environments and to coordinate with one another. These advances open the door to real-world applications in robotics, game playing, and even resource management.
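
To show what distinguishes PPO, the sketch below computes its clipped surrogate objective, the quantity the policy is trained to maximize. For each sample, the probability ratio between the new and old policy is multiplied by an advantage estimate; the ratio is also clipped to [1 - ε, 1 + ε], and the smaller of the clipped and unclipped terms is kept. This is a plain-Python illustration of the formula only: a real implementation computes the ratios from a neural-network policy and differentiates this objective. The function name is hypothetical, and ε = 0.2 is assumed here as a commonly used default.

```python
from typing import Sequence


def ppo_clip_objective(
    ratios: Sequence[float],
    advantages: Sequence[float],
    epsilon: float = 0.2,
) -> float:
    """Mean clipped surrogate objective of PPO (to be maximized).

    ratios[i]     = pi_new(a_i | s_i) / pi_old(a_i | s_i)
    advantages[i] = advantage estimate for the same sample
    """
    if not ratios or len(ratios) != len(advantages):
        raise ValueError("need equally sized, non-empty inputs")
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - epsilon), 1.0 + epsilon)
        # The minimum is a pessimistic bound: pushing the ratio past the clip
        # range in the direction the advantage favors earns nothing extra,
        # while moves in the unfavorable direction are still fully penalized.
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)


# A ratio of 1.5 on a positive advantage is credited only up to 1 + epsilon.
print(ppo_clip_objective([1.5, 0.5], [1.0, 1.0]))
```

The clipping is what keeps each update close to the previous policy, which is a large part of why PPO is simple to tune compared with earlier policy-gradient methods.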

Real-World Applications: RL is no longer confined to simulations and games. Real-world deployments are on the rise, showcasing the technology’s potential in various domains. From optimizing traffic flow in smart cities to managing energy grids and controlling robots in hazardous environments, RL is making a tangible impact.

Challenges and Opportunities: Despite the progress, challenges remain. Scaling RL to complex real-world tasks requires addressing issues like safety, interpretability, and robustness to changing environments. Additionally, bridging the gap between simulated and real-world performance remains a hurdle.

Overall, the field of reinforcement learning is experiencing exciting progress. Advances in sample efficiency, algorithms, and real-world applications make it a vibrant and promising area with immense potential for shaping the future of artificial intelligence.

Here are some additional points to consider:

- The rise of deep learning has significantly bolstered RL’s capabilities, particularly in tasks involving visual inputs and sensor data.

- The open-source community plays a crucial role in sharing code, best practices, and benchmarks, accelerating progress and democratizing access to RL tools.

- Ethical considerations around RL applications are becoming increasingly important, prompting discussions about transparency, fairness, and accountability.

Today, OpenAI released a new result. We used PPO (Proximal Policy Optimization), a general reinforcement learning algorithm invented by OpenAI, to train a team of 5 agents to play Dota and beat semi-pros.

Of all the games AI has made real progress on so far, this is the one that to me feels closest to the real world and its complex decision making (combining strategy, tactics, coordination, and real-time action).

The agents we train consistently beat two-week-old versions of themselves, with a win rate of 90-95%. We did this without training on human-played games. We did design the reward functions, of course, but the algorithm figured out how to play by training against itself.
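
To make "training against itself" concrete, here is a minimal self-play sketch on a toy game. It is not OpenAI's method: it swaps in tabular Q-learning on a hypothetical game of Nim (players alternately take 1-3 stones; whoever takes the last stone wins) in place of PPO on Dota. One shared value table chooses moves for both players, and the only human input is the designed reward of +1 for taking the last stone. The names `train_self_play`, `greedy_action`, and `MAX_PILE`, and all hyperparameters, are illustrative assumptions.

```python
import random

# Hypothetical toy game: players alternately remove 1-3 stones from a pile;
# whoever takes the last stone wins.
MAX_PILE = 21
ACTIONS = (1, 2, 3)


def legal_actions(pile):
    """Moves available when `pile` stones remain, smallest first."""
    return [a for a in ACTIONS if a <= pile]


def greedy_action(q, pile):
    """Best move for the player to move; ties go to the smallest move."""
    return max(legal_actions(pile), key=lambda a: q[(pile, a)])


def train_self_play(episodes=20_000, epsilon=0.5, alpha=1.0, seed=0):
    """Tabular Q-learning in which one shared table plays both sides.

    q[(pile, a)] is the value of taking `a` stones for the player about
    to move, so the opponent's best value enters the target negated.
    """
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(1, MAX_PILE + 1) for a in legal_actions(s)}
    for _ in range(episodes):
        pile = rng.randint(1, MAX_PILE)  # random start so every pile is visited
        while pile > 0:
            if rng.random() < epsilon:
                action = rng.choice(legal_actions(pile))  # explore
            else:
                action = greedy_action(q, pile)  # play as the current self
            next_pile = pile - action
            if next_pile == 0:
                target = 1.0  # designed reward: taking the last stone wins
            else:
                # The opponent is the same table; its best value, negated, is ours.
                target = -max(q[(next_pile, a)] for a in legal_actions(next_pile))
            q[(pile, action)] += alpha * (target - q[(pile, action)])
            pile = next_pile
    return q


q = train_self_play()
print({pile: greedy_action(q, pile) for pile in (5, 6, 7, 9, 21)})
```

After training, the greedy policy should leave the opponent a multiple of four stones (take 1 from 5, 2 from 6, 3 from 7), which is the known winning strategy for this game, even though no example games were ever supplied.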

This is a big deal because it shows that deep reinforcement learning can solve extremely hard problems whenever you can throw enough compute at them and have a really good simulated environment that captures the problem you’re solving. We hope to use this same approach to solve very different problems soon. It’s easy to imagine this being applied to environments that look increasingly like the real world.

There are many problems in the world that are far too complex to hand-code solutions for. I expect this to be a large branch of machine learning, and an important step on the road towards general intelligence.