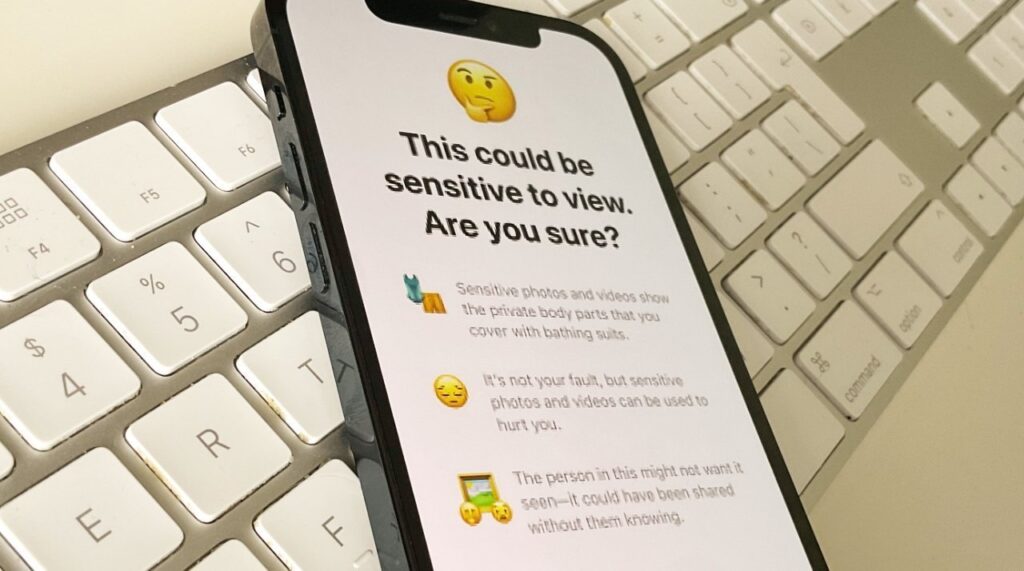

In the wake of reports that dozens of teen boys have died by suicide since 2021 after being victimized by blackmailers, Apple is relying on its Communication Safety features to help protect potential victims — but some critics say it isn’t enough.

Apple’s Communication Safety features can be turned on by parents for a child account.

A rising number of teens are being preyed on by scammers who either trick the victims into providing explicit images or simply make fake images using AI, then use those images for blackmail. The outcomes are often tragic.

A new report from the Wall Street Journal profiles a number of young victims who ultimately ended their lives rather than face the humiliation of real or faked explicit images of themselves becoming public. Such images are known as Child Sexual Abuse Material, or CSAM.
